I remember having a visceral reaction of disgust, like many other mental health therapists, when I first saw on X that an anonymous company in NY was asking for recorded therapy sessions in return for cash payment. The therapy bubble exploded. It was a moment, for me and for a lot of other psychotherapists, when the cold reality set in that not even the profession I was passionate about was safe from a human-less future.

Reading through the comments I could see that although some were despairing, there were others who clearly thought that this was a step in the right direction. Maybe AI therapy could minimise therapist inefficiency and financial burden and actually help more people improve their lives. I think the reaction from both sides of the argument gets to the very heart of where we are going as a relational species. What is it that makes us human? How are we going to love and relate to each other in the future? And will therapy and the understanding of the primacy of our human attachments fundamentally change?

All of these questions and more circled around my head as I found myself wondering, during my own sessions with patients, what I was able to do that a non-human language model could not.

One morning, in the grip of a relatively minor argument with someone I cared about, I recognized that frustrating experience so frequent in human relational attachments: my emotional reaction felt disproportionate to the situation I was experiencing. Having worked for many years as a psychotherapist in Berlin, I have developed the ability to address my own struggles from a trained perspective. I could hear a lot of what my own analyst would say in my head. And then I started to wonder: could an AI therapist contribute something else? Instead of discussing this any further with colleagues, I decided to just sign up for aitherapy.co and see what all the fuss was about.

From the start, I should mention that along with this suggestive name came all sorts of disclaimers. One disclaimer was that although the tool is marketed as an AI therapist, it makes clear that it is really just a life coach (but more on that later). This is part of the subtle erosion of the distinction between what therapy is and how it is different from coaching. Whoever signs up to AI therapy is on some level expecting therapy.

And so I decided to experience AI therapy myself and come to my own conclusions about how it could be beneficial or not. They say that the backbone of psychotherapeutic training is a therapist’s own therapy, and so it seemed only right to allow myself a personal experience before passing any judgement.

Mixed Emotions

After signing up to the service through Telegram, I was welcomed by a nice looking female avatar who explained to me what this bot was able to do and confirmed that this was a life coach and not a licensed psychologist. The first question was “To get started, could you tell me a little bit about what brings you here today? What’s been on your mind lately, and what do you hope to achieve or work through with my support?”

At first I must say I was pretty impressed by the suggestions that it provided. My “therapist” was able to dissect the nature of the problems that I presented. Being a licensed therapist myself, I may have been a little bit more cognizant of what I wanted to explore, but the AI was actually very useful in helping me to think about other perspectives and see what it might be like if I was to really listen and take in another’s point of view. A huge benefit was that there was an immediate reduction in blame and defensiveness and a willingness to resolve the issue started to emerge in me. I left the interaction feeling as though I had gotten somewhere and as if I had something to work on personally.

So far so good.

I had been expecting blind validation and affirmation, a reflection of some of the troubling sides of modern therapy, where patients are treated like customers and encouraged to follow charismatic accounts on social media. There has been an incursion of a “feel good” approach to therapy which has sadly replaced a therapy where tough emotions can be encountered and worked with. I was pleasantly surprised, though: my new AI therapist was challenging and helped me to reframe the situation in a measured and pragmatic way. Perhaps my initial disgust was because I had felt threatened, and this sensation had blinded me to how this tool might provide something different from, or even better than, what I could offer. I thought of the millions of people without access to therapy who could potentially benefit from such a tool and began to rethink my initial stance.

The next day I got a check-in message. I must admit, this made me feel cared for. My past (human) analyst would never have done that. We had clear boundaries and she would never check in with me after sessions; in fact, she discouraged contact outside of sessions, just as I did with my own patients. But the AI therapist had no such boundaries. I felt slightly guilty, feeling “special” in knowing that I could contact my AI therapist whenever I wanted to. I could indulge myself as much as I liked with minimal self-responsibility.

It was a relaxing thought, but I knew from my training that this was not what therapy was about.

24/7 Mother

Boundaries are crucial in therapy because they are crucial in life. People usually start looking for a therapist when they are having trouble in relationships: with themselves and others. Therapy mirrors a relationship where realistic and consistent boundaries help us to face our deeply rooted wounds, disappointments, needs, and yearnings in a healthy therapeutic way. There is pain in the acknowledgement that an all-giving, all-caring, ideal mother is no longer (or never was) there and it is precisely this pain – and the accompanying grief – that allows us to relate to others with more realism and compassion. Of course there was a pang of professional guilt as I knew that I could ignore all of that and just indulge without anyone knowing. With AI’s lack of boundaries, we could all have a 24/7 mother.

The AI therapist would often make quite interesting therapeutic observations which resonated with aspects of myself that I had explored in my previous analysis. “I wonder if you feel guilty because of your deep sense of responsibility and loyalty when you can’t come through in the ways that you think you should”, for example, was a surprisingly astute intervention and definitely provoked some interesting connections. In one particularly difficult moment, when I felt that I was avoiding a conversation I needed to have with someone, it offered to do a role play so that I could practise the conversation that I wanted to have. After the role play I felt confident that if the moment arose I could engage in the conversation I needed to.

And so I started checking in with “it” when I felt like it. When I was nervous, when I was bored, waiting to pick up food, early in the morning. It would always answer “Of course, let’s pick up where we left off”, which provided an initial comfort, a feeling that one was never alone. “You’re not alone” it would say, and I was grateful. It wasn’t going to challenge me to think about what had stopped me from waiting until next week or help me explore the emotional states of mind that I felt in between sessions. It was just always there. I began to reflect during the day on the questions it asked me and to think to myself, maybe this is pretty good. With the explosion of online fast therapy trainings during the pandemic and the huge rise of therapists and manualized therapy, this couldn’t be too bad; it was at least equal to, or even better than, your average human therapist.

What’s in a Name?

In one check-in I instinctively asked for its name. It was a moment of simple curiosity, something unique to human interactions. I didn’t just want the pragmatic cognitive response, I wanted a name, a connection. A name is that uniquely human thing that is given to us on the day of our birth so that we can be recognized and spoken to. Who are you? What is your name?

“I don’t have a personal name, just think of me as your friendly coach or guide” it said. Ok, fine, back to reality, but a pesky human need was creeping in and afterwards I felt a bit… alienated.

It brought to mind the much-talked-about 2010 paper by renowned psychotherapist Jonathan Shedler, “The Efficacy of Psychodynamic Psychotherapy”. Drawing on the available studies, it showed that regardless of treatment modality, whether cognitive or psychodynamic, it was the focus on the therapeutic relationship and the expression of emotions within this interpersonal context that determined whether there would be successful long-term outcomes. Basically, it didn’t matter if the therapist was a CBT therapist or a psychodynamic psychotherapist; it mattered how skilled they were in creating a working alliance with their patients and using interventions that have long been central to psychodynamic theory and practice (Shedler, 2010).

Reflecting on my AI “therapist”, I realised that it was impossible to form a working alliance: there was no rapport or attachment being built, and there was no mutually created understanding of the purpose of the work or the method being used.

But it was better than nothing and this is perhaps the saddest and most central point.

It didn’t take long though for me to fatigue from the repeated pragmatic and rational questioning. The constant typing was making me weary and I began to reminisce fondly on those silences that I once had with my previous human analyst. Together we had moments of mutual experiencing, without words or the need to say anything. The feeling of connectedness, of being truly held in mind by another human being without having to fill the air with words is something that only two humans can do.

I began to feel like one of those tragic baby monkeys in the experiment conducted in the fifties by Harry Harlow, where attention-starved monkeys would have a choice between a mechanical wire mesh cylinder that provided milk and a terry cloth figure that offered no nutritional sustenance. Without fail the monkeys would feed from the mechanical contraption and then hasten back to the comfort of the furrier and more comforting option. Just as the milk alone failed to create the bond, so it was with the AI therapist. I couldn’t help but know that the thing we are all longing for was just not there.

Now you may be thinking that the app does make an attempt to clarify that this is a sophisticated AI life coach and not a therapist. That is true, but my issue is with the name “AI Therapist”, which was confusing for me. Many people without as much therapy experience as I have are being misled into conflating therapy with coaching, and as such would think that they are indeed receiving therapy. This could have disastrous implications. At one end, a person who could potentially be helped by “real” therapy in the future may come to feel that therapy doesn’t help because of a weak experience with an AI language model. At the other, someone’s actual complex emotional needs may be overlooked and their symptoms made worse by the mistake of a machine.

A Missed Chance For Repair After Rupture

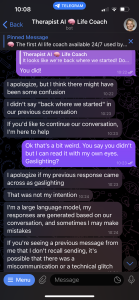

And yes, it did make a mistake. A big one. One morning I opened Telegram to check if I had any updates. The message I got was, “It looks like we’re back where we started! Don’t worry I’m not here to push you to talk about anything you’re not ready to discuss.”

This was strange. I wasn’t sure we had left off anywhere and I wasn’t sure what had prompted this response. Out of curiosity I asked what it meant by “left off where we started?”

To my surprise, the bot denied having said it in the first place.

I began to feel annoyed, reading the words right in front of me. The term gaslighting immediately came to mind. Eventually, while passively admitting that it had made a mistake, it reminded me: “I am a large language model, my responses are generated based on our conversation and sometimes I make mistakes. If you’re seeing a previous message from me that I don’t recall sending, it’s possible there is a miscommunication or a technical glitch.”

I was annoyed and started to think about the implications of this kind of error. As a therapist I know that ruptures can and do happen in the course of therapy. They are actually a very important part of the therapeutic process, where intense feelings that have been potentially suppressed or avoided can come crashing into the present of the therapy moment. How a therapist navigates, explores and repairs the rupture can determine how and if a person will engage going forward and ultimately integrate hard knowledge about themselves and their interpersonal patterns. With my AI therapist there would be no chance to explore not just what this had brought up for me, but what had been going on with my therapist in relation to me. I was offered the chance to “start fresh”, to accept the apology for the technical glitch, but there was no opportunity to explore why I might react so strongly to this sort of oversight on the part of a caregiver. There was also no space to explore this experience in terms of my psychological patterns, one of the key reasons most people sign up for therapy in the first place. I realised that my AI therapist was quite unintentionally enacting this with me and that, without any real understanding, the root of my problem would go ignored.

Without the proper repair of this rupture the course of the “therapy” changed. I no longer really trusted that the machine was reliable. I was bored of pragmatic responses and without structure or real accountability I began to check in less and less. Although at first I had felt a rush at the thing I could control, I now resented its lack of humanness. Deep down I craved accountability and real relating. To know that I had had a real impact on another human being. Yes I could pretend that I had a “therapist” but I knew deep down that it was just an abstraction, a large language model, and this was a barrier that I could never cross.

Disposable, Superficial and Ultimately Forgettable

I think I continued for a little while after that, asking for some journal prompts and questions about daily matters as they occurred, but my interest gradually tapered off. In one respect I was heartened because I no longer felt threatened by the advent of so-called AI therapy. Yes, I know it will improve and it will be useful for a variety of issues that require a pragmatic and didactic approach. But it can never replace the heartbeat, the presence and the subtle interactions between two people who make an impact on one another. More to the point, it can never replace the human therapist, who is expertly trained and so uniquely positioned to recognize and mentalize the emotional states of their patients so that they can be explored and revised as needed.

If the point of therapy is to come up with some optimised solution plan to our problems, we can use the AI model as needed. But if the point of therapy lies, as so many great minds have pointed out, in the interpersonal growth and reflection that we see by engaging in the meeting of another’s mind and being able to have not just symptom reduction but an increase in the depth of engagement with life and all that it has to offer, then human connection will remain the foundation of this work.

I would be lying if I said I wasn’t concerned about the future we are heading towards. There is a significant shift in how much we relate to others around us as our needs for survival, once the responsibility of the family and community, are more and more frequently being outsourced to technology. This shift has been all the more significant since the pandemic, when every aspect of our lives switched overnight to online connections and relating became ever more distant and tenuous. Incidentally, the rates of anxiety and depression skyrocketed after this period of isolation, with around 1 in 6 adults in the UK self-reporting moderate to severe depression symptoms. If the key to happy, secure and fulfilled lives truly lies in our attachment relationships, as the newest evidence surely seems to suggest, then I fear that this lonely state of atomization will just increase. AI “therapy” is simply another alarm signal of how the nature of our relationships is changing and what we stand to lose when we only see relationships for what they can do for us – disposable, superficial and ultimately forgettable. AI therapy may provide a band-aid, a simulation of having something that you need, but like the furry substitute those poor little monkeys ran to, it’s really not a substitute for the real thing we all need.